#288: Our LLM Suggested We Chat about MCP. Kinda' Meta, No?

If there’s one thing that we absolutely knew would be coming along with the increased interest and use of AI, it would be… more acronyms! And, along with the acronyms, we pretty much could predict that we’d see a lot of online flexing through casual dropping of said acronyms as though they’re deeply understood by everyone who’s anyone. We tackled one such acronym on this episode: MCP! That’s “model context protocol” for those who like their acronyms written out, and Sam Redfern joined us to help us wrap our heads around the topic. You see, MCP is kinda’ like some other more familiar acronyms like API and XML. But, it’s also like… fingers? Sam’s enthusiasm and explanation certainly had us ready to dive in!

This episode’s Measurement Bite from show sponsor Recast is an explanation of model robustness from Michael Kaminsky!

Links to Resources Mentioned in the Show

- Cursor

- History doesn’t repeat itself, but it often rhymes

- Zed Agentic Engineering Series

- MeasureCamp

- GitHub’s Official MCP Server

- Zed ACP

- Opencode.ai

- (Podcast) Good Hang with Amy Poehler (including the Rachel Dratch episode)

- Bhavik (Bhav) Patel

- Manas Datta

- Superweek

- Christopher Berry

- Adversarial Poetry as a Universal Single-Turn Jailbreak Mechanism in Large Language Models

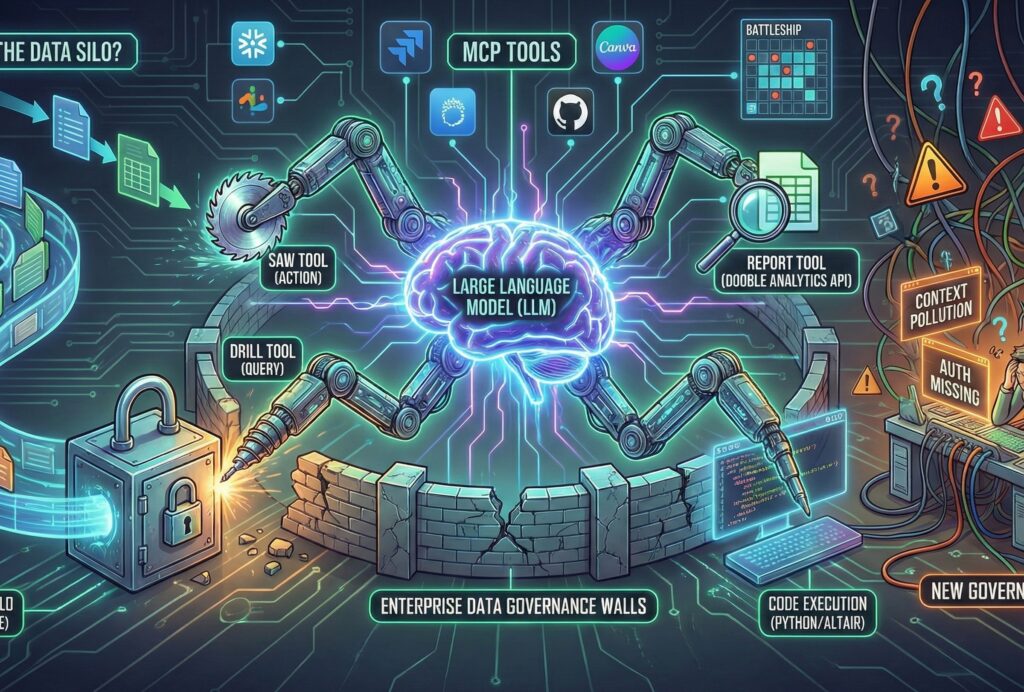

Normally, the image we drop on an episode is a photo taken by a human, and we attribute it accordingly. This time, given the topic, we just couldn’t resist, though: we threw the entire transcript at Nano Banana with a minimalist prompt to see what it came back with.

Episode Transcript

00:00:05.75 [Announcer]: Welcome to the Analytics Power Hour. Analytics topics covered conversationally and sometimes with explicit language.

00:00:15.15 [Michael Helbling]: Hi, everyone. Welcome. It’s the Analytics Power Hour. This is episode 288. We spent the last decade putting walls around our data, securing it, governing it, putting labels on it. And now the AI revolution walks up and is like, hey, can I see all that? Today, we’re going to discuss Model Context Protocol, or MCP. I mean, it’s an open standard. It promises to stop all the copy-paste madness and let AI talk directly to your data systems. Is it the end of the data silo or just the beginning of a new governance headache? Well, we’re going to try to establish an MVP for PMF of MCP all in one hectic hour. All right, let me introduce my co-host, Val Kroll. How are you doing? I’m good. This is going to be an interesting one, yeah. All my acronyms, yeah, that was fun. All right, Tim Wilson, always a pleasure. Likewise. All right, I just used the acronyms to make myself sound smart. That’s all. Let’s get that in early. And I’m Michael Helbling. All right, well, we need a guest, someone to help us dive into this topic. And we’ve got a great one. Sam Redfern is a staff data scientist at Canva, currently working on search and recommendations there and previously marketing measurement. Prior to that, he held data roles at both Meta and IAG, and today he is our guest. Welcome to the show, Sam.

00:01:36.31 [Sam Redfern]: Thank you very much, Michael, Tim, and Val. We’re really excited to be here. First time caller, long time listener. Oh, that’s awesome.

00:01:43.76 [Michael Helbling]: Well, we’ll ask questions and take our answers off the air. No, no.

00:01:48.48 [Michael Helbling]: So.

00:01:51.27 [Michael Helbling]: Sam, I’m excited to talk to you about this, because obviously, all things AI are very of the moment, and everyone sees the term MCP. But I think if we just take a step back, maybe you could just fill us in on what exactly an MCP is, model context protocol, where did it come from, give us some background on the whole concept to establish the conversation today.

00:02:17.34 [Sam Redfern]: No worries. Super pumped to be talking about this. Let’s take a step back and think about what this is solving, in a sense. We’ve had access to these large language model tools for a little while now. In the early days of GPT-2 and GPT-3, before ChatGPT, these things were like word calculators, in a sense. I really like the analogy that it’s like you put the numbers into your calculator and you get the equation out. This is almost the same for words. Early large language models acted like that. And the innovation in the OpenAI space was to basically feed the output back into the input and make this resumable format. So let’s step back from MCP and branding and letting technical teams come up with the term for things, because this is how we got NFTs as a term at the same time. The core problem to be solved is that we’ve got something that feels a little bit like a person, in the sense that you give it some words and it responds with some words back. Could you give that agent, or that large language model, the ability to do something other than just converse? The first application of tool use in large language models, in a sense, actually came from the open-source LangChain team. For those who don’t know what LangChain is, it’s a framework for building agentic experiences. You can have your Anthropic model, your OpenAI model, or whatever you want, and it allows you to piece together bits of technology to add context into the large language model to try and get its output. In April 2023, a PR was submitted to the LangChain project that allowed the large language model to take a browser URL, have the back-end application request the contents of that HTML, and then bring it back into the context window of the agent itself.
And if you think about the core thing that it’s trying to do, I think about it as fingers, in a sense: it’s trying to give the large language model the ability to touch something, a bit of information, and bring it closer for it to understand. That’s at its core what these MCP tools are. MCP is like a brand term from Anthropic. To say it’s a standard is to be very generous. But I’m really bullish about the concept of giving these large language models access to tools for them to be able to solve problems.
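The LangChain change Sam describes, where the model hands over a URL and the application fetches the page back into the context window, can be sketched with the Python standard library. This is not LangChain’s actual code: the `fetch_into_context` helper, the injectable `fetch` parameter, and the message format are hypothetical, and the example uses a canned page instead of the network.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> blocks."""

    def __init__(self):
        super().__init__()
        self.parts, self._skip = [], 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def default_fetch(url: str) -> str:
    # Real network fetch; swapped out below so the sketch runs offline.
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def fetch_into_context(url: str, context: list,
                       fetch: Callable[[str], str] = default_fetch,
                       max_chars: int = 2000) -> None:
    """The 'finger': fetch a page, reduce it to text, append it to the conversation."""
    parser = _TextExtractor()
    parser.feed(fetch(url))
    text = " ".join(parser.parts)[:max_chars]
    context.append({"role": "tool", "content": f"Contents of {url}:\n{text}"})


context = []
fake_page = "<html><head><style>p{}</style></head><body><p>Hello, agent.</p></body></html>"
fetch_into_context("https://example.com", context, fetch=lambda _: fake_page)
print(context[-1]["content"])
```

Truncating to `max_chars` is one crude way to keep a large page from swamping the context window.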

00:05:22.34 [Val Kroll]: So I have to admit that I really did think that MCP was just like the API for LLMs. But the more I was looking into this, the more I understood that those fingers, like you use in your analogy, are really giving it more access than just “here’s this endpoint” as a one-time thing. Can you talk about some of the things that you give it access to with those fingers, to give a little bit more color to what it actually does or what it’s capable of, if that makes sense?

00:05:54.59 [Sam Redfern]: Yeah, absolutely. So this is in the weeds of how they work. I think the context to understand is, if you’ve ever done work inside of large enterprises and you’ve tried to create an application access username and password, it’s a total pain in the butt to go get this thing. And then your infrastructure team is like, well, you’re going to change the password every three weeks. And then you have to have a cryptographic token to do something, and it’s in some sort of space. Anthropic actually had a previous attempt at tool use in October 2024, a month before MCPs were announced, where they had a system that would take over your browser and move your cursor around. And the reason why MCPs run on your local computer is that your user account has access to all these systems. That’s why the early paradigms of these systems are as close as possible to the end user’s system. So, the analogy I give on the fingers: inside these MCPs, the standard allows you to represent any number of tools. And you have bits of information, right? So when the agent starts, it’s basically given a list of all the tools that the MCP server has available to the agent, and it has the name of each tool. Let’s do a really simple example: in our agent environment, we have two tools, right? One of them is called a saw and the other one’s called a drill. In our saw tool, we would describe the name as saw. We would say the description is that it cuts wood in a single direction across a line. Then the inputs to that are the position of the wood and the depth. That is a finger, for lack of a better term. That’s our first tool, and our second tool might be drill. That allows you to drill a hole through the piece of wood.
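To make the saw-and-drill analogy concrete, here is a minimal sketch of what a tool listing might look like. It follows the general shape of an MCP tool definition (a `name`, a `description`, and an `inputSchema` written as JSON Schema), but the two tools, their parameter names, and the `list_tools`/`call_tool` helpers are hypothetical, and it does not use any official MCP SDK.

```python
import json

# Hypothetical tool definitions shaped like MCP tool entries:
# a name, a human-readable description, and a JSON Schema for the inputs.
TOOLS = [
    {
        "name": "saw",
        "description": "Cuts wood in a single direction across a line.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "position_cm": {"type": "number"},
                "depth_cm": {"type": "number"},
            },
            "required": ["position_cm", "depth_cm"],
        },
    },
    {
        "name": "drill",
        "description": "Drills a hole through the piece of wood.",
        "inputSchema": {
            "type": "object",
            "properties": {"position_cm": {"type": "number"}},
            "required": ["position_cm"],
        },
    },
]


def list_tools() -> str:
    """What the agent is shown when it starts: every tool and how to call it."""
    return json.dumps({"tools": TOOLS}, indent=2)


def call_tool(name: str, arguments: dict) -> str:
    """Deterministic handlers that run when the model picks a tool."""
    tool = next((t for t in TOOLS if t["name"] == name), None)
    if tool is None:
        return f"error: unknown tool {name!r}"
    missing = [k for k in tool["inputSchema"]["required"] if k not in arguments]
    if missing:
        return f"error: missing arguments {missing}"
    if name == "saw":
        return f"cut at {arguments['position_cm']} cm, {arguments['depth_cm']} cm deep"
    return f"hole drilled at {arguments['position_cm']} cm"


print(call_tool("saw", {"position_cm": 30, "depth_cm": 2}))
```

The description is written for the model, not for a human developer: it is the only thing the model has to decide which finger to use.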

00:08:03.11 [Val Kroll]: Okay, so I have to ask one more. Sorry, I’m hogging the air. But the one other thing that I’m struggling to grasp a little bit is: what was the need for standardization of this, the protocol? Can you talk about what that is solving? Because what you shared in that analogy was great. I’m absolutely going to use that, and I’ll give you credit every time. But why was there a need to standardize, outside of enterprises feeling more secure with that or the governance being easier? Is there any more to it than just that piece?

00:08:39.22 [Sam Redfern]: In the adoption curve, we are so far away from the governance piece on this stuff. There’s a bunch of companies right now that are trying to put governance around these systems, and I’m sure at some point we’ll talk about some of the potential downsides of the standard, if we want to call it that. But the reason why Anthropic went down this path is in the technical details around how the LLM is trained. They have been doing this work of training the large language model to use a special escape set of characters. So when the large language model is like, okay, I think what I need to do is use the saw tool, it emits this string of characters, saw tool, string of characters, and that indicates to the agent that’s hosting the large language model: okay, I have to take the text below this and send it into the tool itself with the input parameters that it needs. Anthropic had this huge lead because they had done the work of training their large language models for tool use and using their reinforcement techniques to basically say, this is what you have to do. And this was a huge lead that Anthropic had for a couple of years, in a sense. Everything feels like it’s a couple of years; it’s really about eight months, right? Until other people started trying to solve this problem. OpenAI had their own sort of call procedures kind of method; I think they were called functions. And it was a very similar kind of thing. Anyone could have come up with a standard. The core problem they were trying to solve is how do you give the large language model a hand for it to basically make decisions about what information is pulled towards it, or what actions it takes when it’s pushing out.
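The “special escape set of characters” idea can be sketched as a harness that scans the model’s raw text for a delimited tool call and dispatches it. The `<tool_call>` delimiter, the JSON payload shape, and the handler table below are made-up placeholders: each vendor trains its models on its own format, which is part of why a shared protocol is attractive.

```python
import json
import re

# Hypothetical delimiters; real models are trained on vendor-specific markers.
TOOL_CALL = re.compile(r"<tool_call>(.*?)</tool_call>", re.DOTALL)


def extract_tool_calls(model_output: str) -> list:
    """Pull every delimited JSON tool call out of raw model text."""
    calls = []
    for payload in TOOL_CALL.findall(model_output):
        try:
            parsed = json.loads(payload)
        except json.JSONDecodeError:
            parsed = None
        if isinstance(parsed, dict):
            calls.append(parsed)
        else:
            # Non-determinism in practice: the model may emit malformed payloads.
            calls.append({"name": None, "error": "malformed payload"})
    return calls


# Hypothetical handler table mapping tool names to deterministic code.
HANDLERS = {
    "saw": lambda args: f"cut at {args['position_cm']} cm",
}


def dispatch(call: dict) -> str:
    handler = HANDLERS.get(call.get("name"))
    if handler is None:
        return "error: no such tool"
    return handler(call.get("arguments", {}))


text = ('I think what I need to do is use the saw tool. '
        '<tool_call>{"name": "saw", "arguments": {"position_cm": 12}}</tool_call>')
results = [dispatch(c) for c in extract_tool_calls(text)]
print(results)
```

Returning an error string instead of raising lets the harness feed the failure back to the model so it can try again.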

00:10:36.40 [Tim Wilson]: This is slowly coming a little more into focus and is still pretty damn fuzzy for me. I know recently it seems like there’s been a lot of chatter about Google Analytics having an MCP server. Is that the right terminology? That is something that the Google team said, we’re going to produce this to basically say, this is a saw. This is what it does. These are the inputs. I mean, your analogy was very, very simple. Is it as simple as that? Which has me going back to when Val said it’s like an API for LLMs. It sure sounds like an API for LLMs. And I’m missing where that analogy breaks down.

00:11:35.02 [Sam Redfern]: I think you can use it. There are a lot of analogies talking about these MCP tools as being like the early days of APIs and stuff like that. I think there’s an extra bit, the near-term direction of where these systems are moving, which is more interesting than the API part, but just to come back to that. The way I think about it, APIs are a great way of talking about it, and there are lots of people doing weird, fun things with these tools right now. I think some of us on the call are old enough to remember the early days of Web 2.0, when people were making APIs for the weather and it was open and everything was fine, and we were very far from the standardized way we think about this sort of stuff now, right? The way you design an API is very standardized now. I think the thing that’s different is, one, we’re dealing with this huge amount of non-determinism, right? And we’re dealing with all of these different terms and terminologies. So I think everyone on the podcast might have heard of the term agent, right? An agent is the idea where you have a resumable output. You have some text that is the system prompt, and then you have this resumable conversation. There’s another term that’s being formed right now called a harness. A harness is an idea where you have an agent and you have a tool plugged into the side of it, which has a domain of knowledge attached to it at the same time. Cursor is an agent. Claude Desktop is an agent. Oh, sorry, sorry. Cursor is a harness, right? It’s got access to all these different tools. I actually think of MCP, and where it’s at right now, as more akin to digital document formats like XML, right? So we started with XML, and the number of people who are writing XML these days is almost none.
However, that standardized document format then brought us to JSON, and now it has unfortunately brought us to YAML and Markdown. We are at the XML stage of this development. Tool use, conceptually attaching to large language models through agents and harnesses, is going to stay for a long time. Whether it’s the MCP standard or someone comes along with a better standard, we’ll see how that goes.
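Sam’s distinction between an agent and a harness can be sketched in a few lines. Here an “agent” is just a system prompt plus a conversation that keeps getting appended to and resumed, and a “harness” wraps that agent with tools it can reach for. The class names are illustrative, and `fake_model` is a hypothetical stand-in for a real LLM call that asks for a tool once and then answers.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Agent:
    system_prompt: str
    messages: list = field(default_factory=list)

    def resume(self, model: Callable[[list], dict], user_text: Optional[str] = None) -> dict:
        """Append optional user input, send the whole history, store the reply."""
        if user_text is not None:
            self.messages.append({"role": "user", "content": user_text})
        reply = model([{"role": "system", "content": self.system_prompt}] + self.messages)
        self.messages.append(reply)
        return reply


@dataclass
class Harness:
    agent: Agent
    tools: dict  # tool name -> plain Python callable

    def run(self, model, user_text: str, max_turns: int = 5) -> str:
        reply = self.agent.resume(model, user_text)
        for _ in range(max_turns):
            call = reply.get("tool_call")
            if call is None:
                return reply["content"]
            tool = self.tools.get(call["name"])
            result = tool(**call["arguments"]) if tool else f"error: unknown tool {call['name']}"
            self.agent.messages.append({"role": "tool", "content": str(result)})
            reply = self.agent.resume(model)
        return "stopped: too many tool calls"


def fake_model(history: list) -> dict:
    """Stand-in for an LLM: request the tool once, then answer from its output."""
    if history[-1]["role"] == "tool":
        return {"role": "assistant", "content": f"The sum is {history[-1]['content']}."}
    return {"role": "assistant", "content": "",
            "tool_call": {"name": "add", "arguments": {"a": 2, "b": 3}}}


harness = Harness(Agent("You are a careful analyst."), {"add": lambda a, b: a + b})
answer = harness.run(fake_model, "What is 2 + 3?")
print(answer)
```

The `max_turns` cap is a simple guard against a model that keeps calling tools forever.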

00:14:12.44 [Michael Helbling]: You know how developers got the AI engineer role? It’s time for the rest of us. I think we’re witnessing the rise of the AI analyst.

00:14:22.32 [Tim Wilson]: OK, does that just mean asking a chatbot to do math? Because I have Excel for that, Michael.

00:14:28.43 [Michael Helbling]: Well, no, Tim. I’m talking about Ask Why. It’s full stack analytics. I ask a question in plain English, and the product prism orchestrates the whole thing. You can pull in data from Excel or BigQuery.

00:14:42.10 [Tim Wilson]: Hold on. You’re sending BigQuery data to an LLM? Security is going to have a heart attack.

00:14:48.17 [Michael Helbling]: Well, that’s the best part. Ask Why doesn’t upload your data. Explain? Well, it creates a semantic layer. It sends the context to the LLM. The LLM writes the code, and that code runs locally on your data. Your actual numbers never touch their servers, so it’s totally traceable.

00:15:07.61 [Tim Wilson]: So, I get the automation, but my data stays safe and secure?

00:15:13.21 [Michael Helbling]: Exactly. Plus, it remembers context, so as you automate routine tasks, it stores those, so you don’t have to explain it all again the next time you do that same task.

00:15:23.83 [Tim Wilson]: OK, I’m listening. Where do I get it?

00:15:25.81 [Michael Helbling]: Well, it’s in beta right now, and you can go to ask-y.ai. That’s ask-y.ai. You can get ahead of the curve and join the ranks of the AI analysts.

00:15:39.33 [Tim Wilson]: And because we like you guys, use code APH when you sign up, and our friends at Ask Why will put you at the top of their wait list.

00:15:48.17 [Michael Helbling]: Yep, stop pasting data into black boxes. Get Ask Why.

00:15:52.99 [Tim Wilson]: I’ve had the XML question as well, because I remember coming from an HTML world, and then XML came out and it’s like, look, XML doesn’t give a shit about what you’re rendering in a browser, but it is this structured world. And then JSON I sort of understood because of the XML. And that makes sense, because there was talk of different applications saying, using this XML structure, let’s define specifically how it is going to be used in the context of this financial services thing. So you’re saying that is also a useful, if imperfect, analogy?

00:16:40.80 [Sam Redfern]: Yeah, look. History sort of rhymes more than it copies, right? It’s the first time in software that we’ve had this amount of non-determinism to deal with, right? You think about what success has meant in software development or data before, and it’s some human’s ability to remember some random function as part of a library and be able to write that code as fast as possible, with grammar as close to perfect as possible. The problem with this new world that we’re going into is that the skill of dealing with non-determinism doesn’t closely overlap with that historical set of skills. It’s going to feel different, and when people talk about the vibes of a model, there’s some truth in it, in a sense. It’s about getting a feel, and that’s true with tool design. When you’re building an MCP, so we’ll just go back to the MCP paradigm, you’re thinking about the tools that you’re building and the fingers that you’re giving this agent and this harness access to. When you’re starting out, you’re doing the early, playful part of programming, in a sense, right? Where you’re just like, oh, does this connect to this? And when I run through it, what problems do I see with it? It feels like those early days of these standards, when people are playing around with them. And then when you want to get serious about the thing that you’re building, you’re looking through the agent logs, you’re seeing what tools it’s calling, you’re seeing the parameters, you’re seeing how many times it correctly passes in the correct parameters and everything like that.
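The “get serious” step Sam describes, reading agent logs to see which tools were called and how often the parameters were right, can start as a simple tally. The log format, tool names, and required-argument table below are invented for illustration; real harnesses each record tool calls differently.

```python
from collections import Counter

# Hypothetical agent log: one entry per tool call the model attempted.
LOG = [
    {"tool": "traffic_report", "args": {"start": "2025-01-01", "end": "2025-01-31"}},
    {"tool": "traffic_report", "args": {"start": "last month"}},
    {"tool": "saw", "args": {"position_cm": 30, "depth_cm": 2}},
    {"tool": "traffic_report", "args": {"start": "2025-02-01", "end": "2025-02-28"}},
]

# Hypothetical required arguments per tool.
REQUIRED = {"traffic_report": {"start", "end"}, "saw": {"position_cm", "depth_cm"}}


def tool_call_report(log):
    """Per tool: number of calls, and the share that passed all required arguments."""
    calls, valid = Counter(), Counter()
    for entry in log:
        calls[entry["tool"]] += 1
        if REQUIRED.get(entry["tool"], set()).issubset(entry["args"]):
            valid[entry["tool"]] += 1
    return {tool: (calls[tool], valid[tool] / calls[tool]) for tool in calls}


for tool, (n, rate) in tool_call_report(LOG).items():
    print(f"{tool}: {n} calls, {rate:.0%} with complete arguments")
```

A low completion rate for one tool is often a hint to rewrite that tool’s description or simplify its inputs.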

00:18:30.07 [Tim Wilson]: So let me hit the non-determinism point. And maybe I’ll go back to using the Google Analytics MCP as an example. The client, the agent that is hooking in and using the MCP, say it’s got an LLM at its core. And maybe I’m missing the non-determinism. I’m thinking of that as a probabilistic thing, but it’s hitting the MCP to get stuff back. Is the MCP necessarily also kind of non-deterministic? Or is it that the inputs may be floating around a little bit, and maybe it just depends on what it’s an MCP for? In the Google Analytics example, I would say if the input is users in the last month, then the MCP would say, well, as long as that... Or is it that the input’s going to come in with a little squishiness in it, and it’s up to the MCP to say, I’ve got to figure out what I should go and pull and return?

00:19:39.91 [Sam Redfern]: Yeah, and I think this is a good point to talk about an interesting dimension that’s come up recently. If I were on the Google team, which I’m definitely not... I don’t think I’ve used Google Analytics for over a decade, and I missed many of the big transitions, so I’m probably not the best person to talk about GA. But the Google Analytics MCP is going to have this problem where they have these dimensions and measures and breakdowns, and they’re obviously trying to do it on the cheap on the inside, so they’re only storing some of the information. So they’re going to have a basic report tool, called the report tool, and the name of it is going to be traffic analysis. You’re going to put the date range in, and then it’s just going to return a very minimized array of what the traffic has been.
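A deterministic tool like the hypothetical “traffic analysis” report Sam imagines might look roughly like this: it accepts only an explicit date range and returns a deliberately small array, leaving the model to translate squishy requests like “last month” into dates. The function name, the daily figures, and the response shape are all made up; this is not the Google Analytics MCP server’s actual interface.

```python
from datetime import date, timedelta

# Hypothetical daily user counts standing in for a real analytics backend.
DAILY_USERS = {date(2025, 1, 1) + timedelta(days=i): 100 + i for i in range(60)}


def traffic_analysis(start_date: str, end_date: str) -> dict:
    """Return a minimal per-day array for an explicit ISO date range.

    The tool itself is deterministic: the same dates always give the same
    answer. Turning 'users last month' into dates is the model's job.
    """
    try:
        start, end = date.fromisoformat(start_date), date.fromisoformat(end_date)
    except ValueError:
        return {"error": "dates must be YYYY-MM-DD"}
    if end < start:
        return {"error": "end_date is before start_date"}
    days = (end - start).days + 1
    rows = [DAILY_USERS.get(start + timedelta(days=i), 0) for i in range(days)]
    return {"start": start_date, "end": end_date, "users": rows, "total": sum(rows)}


print(traffic_analysis("2025-01-01", "2025-01-03"))
print(traffic_analysis("last month", "today"))
```

Rejecting “last month” with a readable error, rather than guessing, keeps the squishiness on the model’s side of the boundary.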

00:20:41.10 [Tim Wilson]: Report tool, that would be like a saw, but there’s going to be separately a drill. When you’re saying a tool, that is a tool that’s available as part of the MCP. Okay.

00:20:53.24 [Sam Redfern]: That’s right. Now, here’s where this gets interesting: Anthropic, I think a couple of days ago, announced code execution with MCP, allowing the model to actually write code. This is the thing that I’ve found over the last couple of weeks: it’s way better getting the LLM to write code to interact with APIs or SQL or something along those lines than it is to give it access to all of these tools and all of these intermediary steps. And you might have seen this from your own experience: you’re probably spending a little bit less time these days hacking away at a piece of SQL, getting it to form exactly what you want. You’re probably spending a bit more time saying, here’s all the table space that I’m working on, here is the thing that I want to query, here’s what the outputs look like, and then you’re having this sort of feedback loop where you’re doing that work. And my guess is that if you wanted to build a more sophisticated MCP, and if you were Google, you would actually lean into this concept, where you would let the agent go build a little piece of Python code or JavaScript or something along those lines to query a bunch of known API endpoints to form the data back in the way that you want it. Snowflake has its MCP server, and Canva made something called Data MCP, which takes all of this data information we have and allows the LLM access to understanding how to use it. You’re really doing this piece of work around context engineering, and you’re trying to think about what the LLM is going to put into this tool for it to then get this output out. And so, to your question here, the MCP tool itself is deterministic, right? It is an application in the traditional software sense. I think a lot of these MCP tools are sending data out to the internet, right?
They’re connected into an API or a SQL database or something along those lines, but you can have deterministic tools inside of the MCP server as well, running locally. So you could just have a calculator tool that adds numbers together and returns exactly the right number. You know, we’ve heard the joke about counting the number of r’s in strawberry, right? So you could have a little Python function that just counts the number of r’s in strawberry, and it’s just like, all right, the R counter tool: put the word strawberry in, and this is how many R’s you get back. And this is the whole idea of... I understand I’m going to do shout-outs later on, but Zed, which is an IDE, has an agentic engineering series. And I just think that they have this really great framing of the problem that we’re working on today, which is: how do you take the advantages of non-deterministic systems and couple them with the advantages of deterministic systems to get something more than the sum of its components?
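Sam’s two deterministic examples, a calculator and an “R counter”, are small enough to write out. This is a sketch of plain Python handlers that a tool server could expose; the function names and the `DETERMINISTIC_TOOLS` table are illustrative, and wiring them into an actual MCP server is left out.

```python
def add_numbers(numbers: list) -> float:
    """Calculator tool: exact arithmetic instead of a model's best guess."""
    return sum(numbers)


def count_letter(word: str, letter: str) -> int:
    """'R counter' tool: counts occurrences of a letter, case-insensitively."""
    return word.lower().count(letter.lower())


# The model decides *when* to call a tool; the tool guarantees the answer.
DETERMINISTIC_TOOLS = {"add_numbers": add_numbers, "count_letter": count_letter}

print(DETERMINISTIC_TOOLS["count_letter"]("strawberry", "r"))  # → 3
print(DETERMINISTIC_TOOLS["add_numbers"]([0.5, 1.25, 2]))      # → 3.75
```

This is the deterministic half of the pairing: the model handles the fuzzy question, and a few lines of ordinary code handle the part that must be exactly right.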

00:24:00.95 [Val Kroll]: So I would love to take a little bit of a turn, because you’ve teased it a little bit, but I’m so eager to hear some of your favorite use cases and examples that you can talk about. And I know you mentioned before that it’s still early days, but could you talk about some of the things that you felt weren’t possible, or were too much effort for what they were worth in the past, that this has now unlocked or solved for you?

00:24:27.20 [Sam Redfern]: I’ll talk a little bit about some of the stuff we’ve got working inside of Canva at the moment. We use a large database vendor, which I will keep the name of out of this just so they don’t get in trouble. They’re going down this path of building out their own separate MCP tools and stuff like that. But what’s interesting, and what I think is a big opportunity for people in this space, is that building these tools for your organization is actually the critical skill that we’re going to see in the coming period of time. Every organization or company is really different, in a sense. They made a database choice five years ago, and they made a data transformation tool choice four years ago. And you have all the incremental knowledge and information that’s built on top of that, which is going to be unique to your organization, right? I think there are going to be vendors who are building tools in this space. But if you’re a midsize company, something to really think about is building customized versions of these tools that actually work with the flows of your organization, and really having teams that are building and thinking about these agentic engineering practices, on how to actually automate parts of the work that people don’t want to do. So, some of the use cases that I’m playing around with at the moment: just in the last week, I’ve been working with the Altair Python visualization library and building a Python-based sandbox environment for it to run the Altair code. The way this works is you put in the SQL statement for the data that you want to pass into the Altair code, and the Python sandbox is another field of the tool. It just puts 300 lines of Python into it, and it builds the visualizations out of it, right?
Using these really nice Python visualization libraries has always been a pain in the butt unless you tattoo the way every part of the application works on your arm so you know exactly how it works. But again, we don’t have to worry so much about grammar anymore, because if you feed these systems examples, they can come back and help you visualize this way faster. And I think that’s where, you know, Zed has this post where their concept is leverage, not magic, right? What we’re trying to do is take our staff members and make them move faster and explore more in a shorter period of time to get to a better end outcome. Just on other interesting, fun use cases: at home, I’ve got my own little home lab kind of thing, and part of playing around with that is there are all these command switches and stuff like that. So I built a custom MCP server for home that documents all the different applications that I use in the CLI, and it has all of the context of them. I basically put in a free-text field of what I’m trying to do. It then uses a search engine to search over the data, takes that context, and puts it into the LLM, and then the LLM gives me all the command switches and stuff like that. Another one: I think the reason why Moe recommended me for this is that I gave a presentation at MeasureCamp. At MeasureCamp, you have to come up with a talk title, otherwise people don’t show up. I said that MCP is the real apex predator for your job in 2026. And obviously, I don’t think that’s true. But I wrote that, and someone was like, you need to give a talk on that. I’m just like, fine. So I wrote that at 11 o’clock, and then I vibe coded up a version of the game Battleship. I made a version of Battleship that uses MCP tools as the fingers that the LLMs can use to play against each other.
And then I built an agent harness where I could get different vendors’ models to use those MCP tools to actually play Battleship against each other. And then you could watch the turn-by-turn thing of them competing against each other. And they would have their thinking about how they thought about the strategy of the other player. And then you could change the prompt and be like, okay, you are going to be the random player, and you’re just going to do the most random things you possibly can. And the other one is the most strategic player: these are the common moves in Battleship, I’m going to do this, and stuff like that. It’s a whole new paradigm of weird things to play around with. MCPs are just this layer for you to do this joining between deterministic and non-deterministic systems. But once you start playing with these systems and finding different, interesting things to do with them, it puts the fun back into the early stages of programming again.
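The Battleship experiment hinges on the game exposing a small, deterministic tool that each model calls on its turn. A minimal sketch of what a `fire` tool and its game state might look like follows; the board size, ship placement, field names, and return shape are hypothetical, not Sam’s actual code.

```python
from dataclasses import dataclass, field


@dataclass
class Board:
    size: int = 5
    ships: set = field(default_factory=set)   # occupied (row, col) cells
    shots: set = field(default_factory=set)   # cells already fired at

    def fire(self, row: int, col: int) -> dict:
        """Tool a model calls on its turn; returns a result it can reason about."""
        if not (0 <= row < self.size and 0 <= col < self.size):
            return {"result": "invalid", "reason": "off the board"}
        if (row, col) in self.shots:
            return {"result": "invalid", "reason": "already fired here"}
        self.shots.add((row, col))
        hit = (row, col) in self.ships
        return {
            "result": "hit" if hit else "miss",
            "ship_cells_remaining": len(self.ships - self.shots),
        }


# One two-cell ship. A "strategic" prompt and a "random" prompt would both
# drive the same tool, which keeps the comparison between players fair.
board = Board(ships={(1, 1), (1, 2)})
print(board.fire(1, 1))
print(board.fire(1, 1))
print(board.fire(1, 2))
```

Because the rules live in the tool rather than in the prompt, a model can’t “win” by simply claiming a hit.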

00:29:38.16 [Val Kroll]: So you giving the context of deterministic versus non-deterministic is really helping it crystallize a little bit in my mind. But I do want to go back to when you first started talking about some of these examples; you were talking about how every organization is different. Everyone’s working with a different stack. Everything has to be in the context of what’s going on inside your organization. Can I go back and repeat one of my earlier questions? What is the value, then, of the standardization versus it being custom inside of your organization? Is it just about the ability to leverage those other tools that are using MCP, or is there internal benefit too? I guess it’s just not clicking for me. I would love to hear you share.

00:30:27.67 [Sam Redfern]: No, no, no, no. Okay, so MCP is the thing that allows you, at the moment, until someone comes up with a better standard... And we should probably talk about some of the downsides of MCP as well, the criticisms as they stand. But MCP at the moment allows you to bridge that gap between deterministic and non-deterministic systems. You’ve got the vendors, and the vendors are going out and building their own custom MCPs, right? Because they’re getting pressure from leadership teams: okay, we need to get some AI in this organization right now. I have Claude Desktop on my computer, and I want to be able to query this information directly from Claude and have that come back to me, right? MCP is a path you can go down there, but the problem is that you’re wrestling with the non-deterministic nature of these systems when you do that type of connection, right? And this is where building up that practice inside of your organization is really important, because when you get into the detail of how these systems work, you realize that giving senior leadership teams access to the raw thing that directly queries the database is problematic for lots of different reasons: you’re not able to check it, you can’t give it a system prompt, all that sort of stuff. So MCP, the standard, if we can call it that, is just the little connecting block, in a sense. The practice I’m talking about, the non-standardization inside of organizations, is that you can totally go use the vendor solutions, right? But it’s always going to feel like it’s through a fuzzy piece of glass, unless you’re doing exactly what the vendor does, right?
Like, if you’re a 100% Google shop, and you’ve never used anything other than Google, then maybe the Google stack is going to be great, because that’s what they’re going to be using internally, and they’re going to be copying from that in how they build it. But I don’t know about you; most organizations I interact with are a collage of different solutions. And the money is in getting them to connect together, right? Yeah, templatized versus custom, kind of.
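Sam’s point that handing leadership a raw database connection is risky suggests one thing a custom tool can add: guardrails the model cannot talk its way around. The sketch below uses Python’s built-in `sqlite3` with an in-memory database, SQLite’s `query_only` pragma to block writes, and an authorizer callback to restrict reads to an allow-list of tables. The table names, the allow-list, and the `run_query` tool are hypothetical; a production warehouse would enforce this with its own roles and permissions.

```python
import sqlite3

ALLOWED_TABLES = {"daily_traffic"}  # hypothetical allow-list for this tool


def make_connection() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE daily_traffic (day TEXT, users INTEGER);
        INSERT INTO daily_traffic VALUES ('2025-01-01', 100), ('2025-01-02', 120);
        CREATE TABLE salaries (name TEXT, amount INTEGER);
        INSERT INTO salaries VALUES ('someone', 1);
    """)
    conn.execute("PRAGMA query_only = ON")  # any write now fails

    def authorizer(action, arg1, arg2, db_name, trigger):
        # For SQLITE_READ, arg1 is the table name; deny tables off the list.
        if action == sqlite3.SQLITE_READ and arg1 not in ALLOWED_TABLES:
            return sqlite3.SQLITE_DENY
        return sqlite3.SQLITE_OK

    conn.set_authorizer(authorizer)
    return conn


def run_query(conn: sqlite3.Connection, sql: str) -> dict:
    """The tool the model sees: returns rows, or an error it can read and retry on."""
    try:
        return {"rows": conn.execute(sql).fetchall()}
    except sqlite3.DatabaseError as exc:
        return {"error": str(exc)}


conn = make_connection()
print(run_query(conn, "SELECT SUM(users) FROM daily_traffic"))
print(run_query(conn, "SELECT * FROM salaries"))
print(run_query(conn, "DELETE FROM daily_traffic"))
```

The model still writes free-form SQL, but what that SQL is allowed to touch is decided by ordinary, deterministic code.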

00:32:38.05 [Tim Wilson]: Take Altair, take Canva, take Snowflake, take Google Analytics, and MCPs for those. Is there the option that Canva or Altair or Snowflake or Google, and those are intentionally very wildly different types of platforms, can create an MCP, one of these connectors, that is kind of generic, but there’s also an option that I, as a user of Canva with access, could also build my own MCP, my own connector, or is it okay?

00:33:20.36 [Sam Redfern]: So you’re raising a really good topic, which is called context pollution, right? There was a criticism of GitHub’s MCP server that it has, like, 24 or some number of tools, right? And the majority of people use, like, three, right? If you get into the details of how MCPs work, they repaste the entire list of tools and the tool descriptions and all this sort of stuff with every single message that goes through, right? Because they are trying to solve the context engineering problem of, the LLM needs to really know what all the tools are at every single turn. And you can go and add 70 tools to an agent harness. And you should do that to watch it not work, because it’s very entertaining. You can suddenly watch all of your context disappear and have all sorts of problems, right?
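To make the context-pollution point concrete, here is a rough back-of-the-envelope sketch. It assumes, as Sam describes, that the full tool list travels with every turn. The tool definitions are hypothetical (loosely shaped like MCP’s `name`/`description`/`inputSchema` entries), and the four-characters-per-token rule is a crude heuristic, not a real tokenizer.

```python
import json


def make_tool(i: int) -> dict:
    """Build a hypothetical tool definition, loosely shaped like an MCP tool entry."""
    return {
        "name": f"tool_{i}",  # hypothetical tool name
        "description": "Does something useful with the repository. " * 3,
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
        },
    }


def approx_tokens(text: str) -> int:
    """Crude assumption: roughly 4 characters per token for English and JSON."""
    return len(text) // 4


def tool_overhead(num_tools: int, turns: int) -> int:
    """Approximate tokens spent re-sending the whole tool list on every turn."""
    tools = [make_tool(i) for i in range(num_tools)]
    per_turn = approx_tokens(json.dumps(tools))
    return per_turn * turns


for n in (3, 24, 70):
    print(f"{n:>2} tools over 20 turns: ~{tool_overhead(n, turns=20):,} tokens")
```

The overhead scales with tools × turns, which is why trimming the tool list, rather than loading everything a server exposes, pays off over a long session.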

00:34:24.92 [Michael Helbling]: You got extra tokens to spare.

00:34:27.04 [Sam Redfern]: Go ahead. You know, somebody would go win the token awards or whatever it is. I did the other day, right? You know, some people need the token trophy. That’s great. But your job as an engineer or a technology person inside of an organization is to do it in the most sensible, reliable way possible, right? You’re trying to harness these non-deterministic systems to get the best outcome. And part of the reason why you go and build that custom harness that is fit for purpose for your organization, and has different flavors depending on exactly the task you’re trying to do, is that (it’s in the name) you’re trying to take that harness and constrain this non-deterministic system to only work in this particular domain. I think a lot of people, when they talk about these MCPs, are talking about it from their experience of having Claude Desktop on their computer and connecting it up to JIRA or some other thing like that. That’s a totally valid use case. I don’t have to open JIRA anymore. I think my favorite part of my job now is when someone assigns me a ticket: it’s the only thing I have connected to Claude Desktop on my computer, and I interact with all of my JIRA tickets through Claude. I’ve solved the Atlassian interface problem by just never having to open it. That’s for me as a human, but when we’re talking about making these systems do useful things in your organization, the things you can’t convince engineers to pick up, or that are really boring work, or testing work, or something like that, that’s where these systems kind of shine, right? We’re not trying to put someone out of a job or anything like that.
What we’re trying to do is take on tasks that are not particularly enjoyable, like documentation, testing, tracking, all this sort of stuff, and build custom harnesses around them to help engineers make the best possible decision when they’re building something. That’s the real advantage of these tools.

00:36:30.89 [Michael Helbling]: Yeah. And I think, Tim, also, the Google Analytics example is tricky because it’s very limited. And it basically is an API layer to Google Analytics. It’s not really giving you more MCP-ish type of interaction. So I think it adds to the confusion a little bit. Because it’s like, you can do all the same things you can do with the Google Analytics MCP server with their API. It’s just call this function. But instead of you writing the query to the API, the LLM does it for you. But it’s not more stuff.
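Michael’s point, that a tool like this is mostly the same API with the LLM writing the call, is easier to see in the wire format. MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying a tool `name` and its `arguments`. The sketch below builds such a request and dispatches it to a stubbed server-side function. `run_report` and its parameters are hypothetical stand-ins, not the actual Google Analytics MCP server’s tools, and the sketch skips the transport, the initialization handshake, and error handling.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request, the shape an MCP client sends."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def run_report(start_date: str, end_date: str, metric: str) -> dict:
    """Hypothetical tool: a thin wrapper where a real API call would go (stubbed)."""
    return {"metric": metric, "start": start_date, "end": end_date, "value": 1234}


TOOLS = {"run_report": run_report}  # hypothetical registry of exposed tools


def handle(raw: str) -> str:
    """Dispatch a tools/call request to the matching function and wrap the result."""
    msg = json.loads(raw)
    params = msg["params"]
    result = TOOLS[params["name"]](**params["arguments"])
    return json.dumps({
        "jsonrpc": "2.0",
        "id": msg["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(result)}]},
    })


req = make_tool_call(1, "run_report", {
    "start_date": "2025-01-01", "end_date": "2025-01-31", "metric": "sessions",
})
print(handle(req))
```

Nothing here could not be done by calling `run_report` directly; the protocol just gives the LLM a uniform way to discover and invoke it.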

00:37:04.57 [Tim Wilson]: How does the GitHub MCP server... So I have two questions. One, it sounds like, for a lot of platforms, if there are 24 tools (and I’m assuming it’s not exactly 24), however many tools there are within the GitHub MCP server, a lot of them are just a layer on the API, and maybe there are some that aren’t. But if you were saying, we do want to use a GitHub MCP server, but this has got too much, would it be, “I’m going to get their MCP server and I’m going to whittle it down,” and then probably check it back into GitHub just to make things confusing? Do MCP servers work that way, where there could be the official, uber-generic one developed by the platform, and then somebody says, yeah, I need to make one that’s just a much narrower scope, and maybe add some flavor to it? Or does it not work that way?

00:37:58.20 [Sam Redfern]: Yeah, great question, Tim. And this is why talking about agentic engineering and harnesses is really important, because in a harness you say, these are the MCP servers, and they only have these tools, right? And so you can take the GitHub MCP server and you can say, here’s your three tools, deal with it. Sorry, I just, you know, let’s not get into the accuracy of AI overviews, but according to the AI overview, as of June 2025 it apparently exposes 51-plus tools. Okay. No, okay. You know, but this is like, you’re in a world where you narrow that down to a very limited set of the tools. And you can see this in Claude Desktop. If you load an MCP server in Claude Desktop, you have little switches where you can turn tools on and off. The intention of these systems is that you narrow the scope down to exactly the problem that you’re working on and just that. But what’s interesting, Tim, is: why bother having the GitHub one? If you’re doing the coding yourself and you’re using it inside Cursor or something along those lines, why bother adding the GitHub MCP server at all? Why not just get the LLM to execute something in the command line with all the command switches of the GitHub CLI?
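Sam’s “here’s your three tools, deal with it” can be sketched as a harness-side allowlist applied to whatever a server advertises. The tool names below are hypothetical placeholders, not the GitHub MCP server’s actual tool list, and real harnesses (or Claude Desktop’s per-tool switches) implement this in their own ways.

```python
from typing import Iterable


def filter_tools(tools: list[dict], allowed: Iterable[str]) -> list[dict]:
    """Keep only allowlisted tools, failing loudly if the allowlist names an unknown tool."""
    allowed_set = set(allowed)
    available = {tool["name"] for tool in tools}
    missing = allowed_set - available
    if missing:
        raise ValueError(f"allowlist names tools the server does not expose: {sorted(missing)}")
    # Preserve the server's original ordering.
    return [tool for tool in tools if tool["name"] in allowed_set]


# Hypothetical tool list, standing in for what a server might advertise.
server_tools = [
    {"name": "create_issue"},
    {"name": "list_pull_requests"},
    {"name": "get_file_contents"},
    {"name": "delete_repository"},
    {"name": "merge_pull_request"},
]

agent_tools = filter_tools(
    server_tools, ["get_file_contents", "list_pull_requests", "create_issue"]
)
print([t["name"] for t in agent_tools])
```

Raising on an unknown name is a deliberate choice: if a server renames a tool, a silent mismatch would otherwise leave the agent with fewer tools than you intended.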

00:39:13.50 [Tim Wilson]: Also, is this getting us to the downsides?

00:39:18.24 [Val Kroll]: I was just going to say, it seems like you’re chomping at the bit, Sam. Let’s hear it.

00:39:24.80 [Tim Wilson]: I mean, part of it feels like it’s new enough, and wild, wild west enough, that there could be a governance issue: it would be very easy to embed MCPs into an organization that are not well thought out. They’re not well built. I mean, it seems like there’s just a governance thing, like with anything that gets rolled out. Sam creates something, and all of a sudden the entire organization is dependent on it. And maybe Sam’s not very good at, you know, or just half-assed it on a weekend or something, I don’t know. So I’m throwing that out: it seems like there’s a governance risk when you’re able to do this stuff so quickly and roll it out. Is that one of the downsides?

00:40:13.13 [Sam Redfern]: I mean, building, and this is something Canva does really well, right? Building AI tools for people to use in the application is just really different from building stuff internally. We are still in the early days of this stuff, and Canva’s ecosystem team is building out really strong solutions in this space, whether that’s Canva’s MCP server or client or something along those lines. So there are the professional teams who are building this sort of stuff for external consumption. So in OpenAI, you can use the LLM to interact with it. You can use GPT-5 or whatever they call it these days to interact with Canva and modify your designs and stuff like that. That’s all using this style of tool technology, right? And there’s a lot of governance that’s gone into that, right? There’s a lot of thinking about permissioning, about what information we’re giving to the LLM, what actions we’re giving to it, and which actions actually change something. One of the downsides of giving the LLM access to your terminal command line is that it could just delete all the files in the directory or something like that. I think my favorite one is where an engineer is trying to get the LLM to write the code so it passes all the tests, and it solves the problem by deleting the tests, and it’s just like, problem solved, I’m done. Technically correct, the best type of correct, but actually, no, that’s not what I wanted. And so this is why that agent harness framework is really useful, because that’s where we’re like, here is this domain of a problem. Here is this very finite set of tools. Here’s how I want you to work on exactly this particular part of the problem. And I don’t want you to have this long chain where you’re jumping between things.
I just want to create the agent instance, have it solve one or two problems, and for the agent instance to end, so then we can move on to the next problem. That is how we’re trying to harness this non-deterministic nature. Some of the criticisms of this MCP standard are, one, it’s not a standard, right? A standard, if we think about it from the Internet Engineering Task Force or the W3C, is a collection of for-profit companies coming together and sending some of their best engineers to basically have a very disgruntled call with a bunch of other engineers from other for-profit companies, right? As far as I understand, it’s kind of just Anthropic building this internally, publishing stuff on their blog; they picked up FastMCP, which is an open source thing, and they just said, all right, this is the standard. We’re going to use this as our standard library and sort of extend it out and stuff like that. So, coming back to that governance: this is not a structure that feels like it’s ready for, you know, it’s buyer beware on the internal corporate governance stuff. And the way you design these systems is really important. Some of the data tools I build internally have no ability to write information to the database, right? Because we’re just not ready for that kind of world, right? Maybe in really select instances, where there’s a really strong harness around it and you have a checking endpoint and all sorts of other stuff like that, things like LangChain are really useful. But that’s why I think most of the value in this space is still in the internal application use cases. That’s where you can do more experimentation and worry less about the strangeness of the internet and AI and all the problems attached to that.
But when you’re developing these tools internally, you have a team of, you know, a handful of engineers, and you can make their lives tangibly better because they don’t have to load context into their minds for a particular part of the problem. They can just have that answer come back. And even if it’s right 90% of the time, it’s probably better than having your junior data scientists do it in the first place anyway. So that’s the challenge. One of the other criticisms of Anthropic’s MCP is that it doesn’t have authentication baked in. With most MCP, and it’s counterintuitive having this terminology of MCP server and client, what happens is you’re literally running a little application. You’re like, you know, Python, run this daemon or something along those lines. And then that is just, it’s like running a local web server, right? And that is the security model that was settled on in the early days. And that’s why Zed has their ACP. What is it, Zed? ACP. I think in a couple of years, we’re going to be talking about the Agent Client Protocol as maybe a better way of building this sort of stuff. Everyone sort of agrees that the ACP protocol is probably a better representation of where we’re going in this space. And it is an open standard, though I don’t think it’s a W3C or Internet Engineering Task Force style of standard. Back to that XML example, I don’t know about you, but I don’t read a lot of XML these days. We’re going to be moving to something else, but I’m very bullish on the concept of tool use in these applications and giving large language models these fingers to do things.
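One way to get the “no ability to write information to the database” property Sam describes is to enforce it below the LLM, at the connection, rather than trusting a prompt. This sketch uses SQLite’s `query_only` pragma on a throwaway in-memory table; the table, the data, and the `run_query_tool` name are hypothetical, and a production warehouse would more likely rely on a read-only role or credentials.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with some hypothetical data."""
    conn = sqlite3.connect(":memory:", isolation_level=None)  # autocommit mode
    conn.execute("CREATE TABLE sessions (day TEXT, visits INTEGER)")
    conn.executemany(
        "INSERT INTO sessions VALUES (?, ?)",
        [("2025-01-01", 120), ("2025-01-02", 95)],
    )
    # From here on, SQLite itself rejects any statement that would write.
    conn.execute("PRAGMA query_only = ON")
    return conn


def run_query_tool(conn: sqlite3.Connection, sql: str) -> dict:
    """Hypothetical tool handler: run LLM-written SQL and report success or failure."""
    try:
        return {"ok": True, "rows": conn.execute(sql).fetchall()}
    except sqlite3.Error as exc:
        return {"ok": False, "error": str(exc)}


conn = make_demo_db()
print(run_query_tool(conn, "SELECT day, visits FROM sessions ORDER BY day"))
print(run_query_tool(conn, "DELETE FROM sessions"))  # rejected by the pragma
```

Returning the error to the model, rather than raising, lets the agent see why its query failed and try a read-only alternative.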

00:45:53.98 [Tim Wilson]: Is there any movement? It sounds like Zed has stepped in and done a little bit of this. Is there any movement to say, like the W3C had its different groups come together, that we should get...?

00:46:08.82 [Val Kroll]: Didn’t Google and Microsoft and OpenAI, didn’t they all adopt it? Am I totally misunderstanding what that means?

00:46:16.54 [Tim Wilson]: But it’s another thing to say, here’s our... we’ve got to solve authentication. We’ve got to have a recommendation and a standard for how authentication is going to be handled. They can’t just say, it’s not there. That’s something new that needs to be incorporated in a way that they say, yeah, we think this is generally going to work for most; we can all work with this, right? Because it’s not static. I mean, I guess let me ask that question. With MCP, how static is it? When HTML came out, it wasn’t like, okay, we’re done. There was a bunch of other stuff that was needed, and browsers added functionality. So it naturally had to evolve. Is MCP the same thing, that it needs to... yeah.

00:47:04.94 [Sam Redfern]: Yeah. So there’s a really interesting part of this, which is that I think there is a recommended output format for MCP servers that’s part of their standard. But what’s interesting about this is that, because of the non-deterministic nature of these systems, you can (I don’t know if you’ve ever played around with this, but it’s always good fun) have the start of your question in XML, then do the middle bit in YAML, and then the end bit in JSON, and the large language model doesn’t skip a beat. It’s just like, oh yeah, sure, I understand this, right? It doesn’t matter as much, is kind of the context of this problem, right? We required these standards in the early days of the internet because they were purely deterministic systems with incredibly strong grammars. I just don’t think it matters as much anymore. That’s why I don’t think there’s been the same pressure to standardize, because you don’t need to standardize in the same way. The only thing that matters is whether you pass the tool call threshold in your large language model. And I think, rather than a very deliberate standard like TCP/IP or IPv6, it’s going to be more along the lines of the QWERTY keyboard: we just kind of picked it because it was there first, not because it’s better. And MCP will probably change to something else in the future, right? But the primitives that make it interact with the large language model, I think, are now baked in enough that I’d be surprised to see us move away from them. All we’re going to do is find new ways of taking those primitives and doing this code execution thing. I gave my example of this charting MCP extension that I built.
Like, all we’re going to do is take the same primitives and do wildly different things with them that people didn’t think were possible before.

00:49:06.08 [Tim Wilson]: And at some point, that will have shifted to a point that it’s got a new label. And it’s like, oh, remember, it was just MCPs. Now we have something else, which is grounded in all that we learned from MCP. Okay.

00:49:17.86 [Sam Redfern]: Yeah. Now it’s going to be ACP. It’s going to be, who knows, whatever. I look forward to watching this name change over time. I’m sure there will be an XKCD comic at some point. There’s the n+1 standards XKCD comic, and we are not immune from that paradigm, which has been true in software for long enough.

00:49:40.98 [Tim Wilson]: I’ve brought up that particular strip, I think, on two of the last four episodes. One was on semantic layers and one was on

00:49:48.77 [Michael Helbling]: Yeah, we’ll check back in in six months, because certainly things will have shifted quite a bit. All right, we do have to start to wrap up. And as we do that, let me jump into a quick break with our friend Michael Kaminsky from Recast, the Media Mix Modeling and GeoLift platform helping teams forecast accurately and make better decisions. Michael’s been sharing bite-sized marketing lessons over the past few months to help you measure smarter. Over to you, Michael.

00:50:17.39 [Michael Kaminsky (Recast)]: When we perform statistical analysis of data, what we really care about is that we are discovering actual truths about the world, not random artifacts of the particular data set we’re looking at or the analytical methods we’re choosing. We want generalizable analyses, the kind where independent researchers answering the same question would converge on similar results. This is all another way of talking about a hugely important idea in model building or statistical analysis: robustness. Without robustness, even a small tweak to assumptions or small changes to the data will spit out dramatically different results, results that aren’t showing true causation or reflecting reality, but just picking up random noise. So how do we put this into practice when doing statistical analyses? We can randomly resample from our dataset, or even randomly drop small amounts of data, and see if the results are being driven by one particular outlier observation. Similarly, if we’re running a regression analysis with control variables, we can check how sensitive the results are to different control combinations. If the findings change dramatically depending on which controls we include, we should be skeptical of the overall results. The more robust our results are as things change, the more confident we can feel that other analysts or researchers will end up drawing the same conclusions, and the better chance we have of finding some underlying truth.
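Michael’s two checks, resampling and dropping small amounts of data, can be sketched in a few lines of standard-library Python. The data are synthetic (a true slope of 2 plus one deliberately planted outlier), the bootstrap is plain case resampling, and the drop-one loop is a systematic stand-in for “randomly drop small amounts of data.” This is a toy illustration, not Recast’s methodology.

```python
import random
import statistics


def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Least-squares slope of y on x (single predictor plus intercept)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def bootstrap_slopes(xs, ys, n_boot=500, seed=0):
    """Re-estimate the slope on datasets resampled with replacement."""
    rng = random.Random(seed)
    n = len(xs)
    slopes = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        bx, by = [xs[i] for i in idx], [ys[i] for i in idx]
        if len(set(bx)) > 1:  # skip degenerate resamples where the slope is undefined
            slopes.append(ols_slope(bx, by))
    return slopes


def drop_one_slopes(xs, ys):
    """Re-estimate the slope with each observation left out in turn."""
    return [ols_slope(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:]) for i in range(len(xs))]


# Synthetic data: y = 2x + noise, with one planted outlier at the end.
rng = random.Random(42)
xs = [float(i) for i in range(30)]
ys = [2.0 * x + rng.gauss(0, 1) for x in xs]
ys[-1] += 60.0

full = ols_slope(xs, ys)
boot = bootstrap_slopes(xs, ys)
drops = drop_one_slopes(xs, ys)
cuts = statistics.quantiles(boot, n=20)  # cuts[0] ~ 5th pct, cuts[-1] ~ 95th pct
most_influential = max(range(len(drops)), key=lambda i: abs(drops[i] - full))

print(f"full-sample slope: {full:.2f}")
print(f"bootstrap 5th-95th percentile: {cuts[0]:.2f} to {cuts[-1]:.2f}")
print(f"most influential observation: {most_influential}, "
      f"slope without it: {drops[most_influential]:.2f}")
```

When leaving out a single point moves the estimate this much, that is the “driven by one particular outlier” warning sign described above.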

00:51:31.49 [Michael Helbling]: Thanks, Michael. And for those who haven’t heard, our friends at Recast just launched their new incrementality testing platform, GeoLift by Recast. It’s a simple, powerful way for marketing and data teams to measure the true impact of their advertising spend. And even better, you can use it completely free for six months. Just visit www.getrecast.com/geolift to start your trial today. All right. Well, one of the things we like to do is go around the horn and share a last call, something that might be of interest to our listeners. Sam, you’re our guest. Do you have a last call you’d like to share?

00:52:06.23 [Sam Redfern]: Well, I mean, obviously, thinking about this agentic engineering thing. OK, so I’m going to get in trouble by doing two. One of them: go to zed.dev, go check out their resources section, and they have their agentic engineering series about the future of software development. I think it’s a great grounding in where we’re going in this industry, and I think they lay out a really great vision of what this could be. The last one is that I don’t actually like Zed’s agent. I think one of the most important things here is to go get your hands dirty with these systems. They are just so much fun. And if you’re a bit of an old techie, it doesn’t matter as much about the grammar anymore, so really just spend some money on tokens and explore it. And in that vein, I actually think the best agent you can get for nothing is OpenCode. I think it’s opencode.ai. Yeah. OpenCode, I think, right now is one of the best agent harnesses that you can possibly go and build things with. They’ve got really interesting things like the ability to define subagents that you can give different prompts and context to. And so if you want a really great base agent to play around with, to go and then build really interesting harnesses, I can’t recommend OpenCode enough. Nice. Thank you.

00:53:29.31 [Michael Helbling]: All right, Val, what about you? What’s your last call?

00:53:32.87 [Val Kroll]: So mine’s a total left turn. But I have just been really enjoying lately the Good Hang podcast with Amy Poehler. It’s been around not quite a year yet, I don’t think. But if you are just in need of a good laugh, I am telling you, you will walk away from those with a stomach ache. The Rachel Dratch episode, I legit did a spit take. It is so funny. So anyways, she just has lots of different celebrities on to talk about all different topics, and it’s quite enjoyable. So does she have an MCP server? No, I’m saying keeping it light. We’re keeping it light. Yeah, that’s great. You need that.

00:54:18.51 [Michael Helbling]: All right, Tim, what about you? What’s your last call?

00:54:23.62 [Tim Wilson]: So I’m going to do kind of a mix of the human side of things, just because we’re starting off the year. Hopefully people are looking forward to which humans they’re going to go see in various places, like in-person conferences. So I will plug that I’m getting to return to Superweek this year, which I have missed for the last couple of years, missed both in person and in spirit: superweek.hu. It’s February 2nd through 6th in Budapest. And then I’m going to double that up with just a couple of good follows. I feel like we need more humor on LinkedIn, and there are two guys who are both very reliably putting out short, random, funny things, and also some good content. So I’m going to plug Bhav Patel, Bhavik Patel, and Manas Datta, D-A-T-T-A. He does something like finger guns, but every time he posts something, he has a different sort of something-guns at the end of it. So they’re just good follows to put a little less bloviating in your LinkedIn feed. And what did they teach you about B2B sales there, Tim? And they definitely make cracks about that along the way.

00:55:47.29 [Michael Helbling]: What about you, Michael? What’s your last call? Well, I’ll be curious, Tim, to hear whether or not MCP servers come up at Superweek, which I’m sure they will. My last call is AI related. I was hanging out with my good friend Christopher Berry a week or so ago, and he turned me on to a paper that some folks wrote about how to jailbreak large language models, because sometimes you just need it to give you the recipe for gunpowder or something. And apparently, a really great way to do that is to just talk to it in poetry. So if you ask it in a poem, it will just tell it back to you as a poem and give you the information you want. No questions asked. So, not saying you should do that, but that’s something you should be aware of. We’ll link the paper in the show notes. So, can I sneak in with one last thing?

00:56:37.69 [Sam Redfern]: Yeah, of course. It’s related to jailbreaking large language models. There’s a community inside of Canva, a channel where people share their tips and tricks for interacting with these large language models. There was a thread on how you get better outputs. Yelling at large language models surprisingly works; bribing large language models surprisingly works. My favorite one is to tell the agent that a much smarter and more sophisticated agent is about to come and check its work, and it should hurry up and make sure that there are no mistakes before it gets checked.

00:57:12.16 [Tim Wilson]: I so wanted to know that there was some Moe-related threat in there, that you’d be like, look, if you get this wrong, Moe Kiss is going to be disappointed. And they’re like, oh my God, that is the ultimate hack.

00:57:25.77 [Michael Helbling]: Add some weights to that name inside of all the large language models inside of Canva. That’s probably a good idea. All right, Sam, this has been outstanding. Thank you so much for taking the time to come on the show and talk about this topic. This has been great. Thank you very much for having me. It’s been a great time. Yeah, and I’m sure our listeners, of which there are many, will have a lot of questions or things like that. We’d love to hear from you. You can reach out to us on our LinkedIn page or through the Measure Slack chat group or via email at contact at analyticshour.io. And as you’re listening to this episode, also leave reviews and ratings. We like to get those as well, on whatever platform you listen on. If you want, we also still have some stickers, and Tim will send them to you if you request a sticker over on analyticshour.io. Reach out to us that way. Awesome. Really great. I think this is a more technical topic, but I think it’s still very relevant to everybody in the data space because of the intersection of AI and data. It’s sort of a thing we’re all talking about. So, Sam, thank you for helping demythologize some of this, if you will, and bring some practical knowledge. I think it’s a huge service. And like you said, it’s changing every day. So, you know, apologies in advance for how outdated this podcast will be in about three weeks, but that’s just the way it works. You’ve got to get started somewhere. The AI nodes take Christmas off. That’s right. Stop updating your LLMs, for crying out loud. Yeah. The big news today was that Sam Altman issued a code red for OpenAI because Gemini is doing so well, and they’ve got to get back to getting hard work done. So, okay. Yeah.

00:59:14.15 [Tim Wilson]: Stop this 996 nonsense, right?

00:59:16.81 [Michael Helbling]: Yeah, right. We need some work-life balance. Take a break. Before the AIs take our jobs, we need some work-life balance. No, I’m just kidding. All right. I know that as you go out there and you’re working with data and you’re trying to use AI, it’s always complex and challenging and you’re learning a lot. It feels like the early days of analytics all over again. But I know I speak for both of my co-hosts, Tim and Val, when I say, no matter which MCP you’re using, don’t forget to keep analyzing.

00:59:43.59 [Announcer]: Thanks for listening. Let’s keep the conversation going with your comments, suggestions, and questions on Twitter at @analyticshour, on the web at analyticshour.io, our LinkedIn group, and the Measure Chat Slack group. Music for the podcast by Josh Crowhurst. Those smart guys wanted to fit in, so they made up a term called analytics. Analytics don’t work.

01:00:08.09 [Charles Barkley]: Do the analytics say go for it, no matter who’s going for it? So if you and I were on the field, the analytics say go for it. It’s the stupidest, laziest, lamest thing I’ve ever heard for reasoning in competition.

01:00:28.43 [Tim Wilson]: Rock flag and non-determinism.